Building an Advanced Image Search System with Machine Learning and Elasticsearch


In today’s digital world, having a powerful image search system is invaluable. Imagine being able to search for images using other images rather than keywords. This article will guide you through building such an advanced image search system using machine learning techniques. We’ll leverage OpenAI’s CLIP model to process images into feature vectors and Elasticsearch (part of the ELK stack) to store and search these vectors using cosine similarity.

Understanding Image Search with Machine Learning

What is Image Search?

Image search is the process of finding images that are visually similar to a query image. Traditional image search engines rely on metadata or keywords associated with images. However, with advancements in machine learning, we can now search for images based on their visual content.

How Does it Work?

- Feature Extraction: Transform images into feature vectors that capture their visual content.

- Indexing: Store these feature vectors in a database.

- Searching: Compare feature vectors using a similarity metric (e.g., cosine similarity) to find the most similar images.
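The three steps above can be sketched without any model or database. This is a toy illustration only: the three-dimensional vectors and the names `cosine_similarity` and `top_k` are made up for the example, whereas real feature vectors come from a model such as CLIP and have hundreds of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def top_k(query, index, k=2):
    """Return the k (name, score) pairs most similar to the query."""
    scored = [(name, cosine_similarity(query, vec)) for name, vec in index.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]

# "Indexing": a plain dict stands in for the database (hypothetical data).
index = {
    "cat_1": [0.9, 0.1, 0.0],
    "cat_2": [0.8, 0.2, 0.1],
    "dog_1": [0.1, 0.9, 0.2],
}

# "Searching": rank every stored vector against the query vector.
query = [1.0, 0.0, 0.0]
results = top_k(query, index, k=2)
print(results)
```

Comparing the query against every stored vector like this is exact but scales linearly with the collection size, which is the main reason to hand the search over to an engine with k-NN support.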

Our Approach: Using CLIP and Elasticsearch

What is CLIP?

CLIP (Contrastive Language–Image Pre-Training) is a model developed by OpenAI that can understand images and text in a unified manner. It can transform an image into a feature vector that encapsulates the image’s visual content.

Why Elasticsearch?

Elasticsearch is a powerful search engine that supports efficient storage and querying of large datasets. With the k-NN (k-nearest neighbors) feature, Elasticsearch can quickly find similar vectors, making it ideal for our image search system.

Step-by-Step Implementation

Step 1: Set Up the ELK Stack Using Docker

First, we need to set up Elasticsearch and Kibana using Docker.

To set up Elasticsearch, Logstash, and Kibana, clone this repo (https://github.com/propardhu/Docker_ELK_Image_Search) and bring the stack up with Docker Compose.

Verify the setup:

Elasticsearch: http://localhost:9200

Kibana: http://localhost:5601

username: elastic

password: changeme
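The same check can be scripted. The sketch below uses only the Python standard library and assumes the default host and credentials listed above; `fetch_cluster_info` is a hypothetical helper, not part of the repository.

```python
import base64
import json
import urllib.request

def fetch_cluster_info(host="http://localhost:9200", user="elastic",
                       password="changeme", timeout=5):
    """Return the JSON served at the Elasticsearch root URL, or None on failure."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    request = urllib.request.Request(host, headers={"Authorization": f"Basic {token}"})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return json.load(response)
    except (OSError, ValueError):
        # Covers connection errors, HTTP error statuses, timeouts, and invalid JSON.
        return None

info = fetch_cluster_info()
if info is None:
    print("Elasticsearch is not reachable yet")
else:
    print("Connected to Elasticsearch", info.get("version", {}).get("number"))
```

If this prints the "not reachable" message, the containers may still be starting; Elasticsearch can take a little while before it accepts connections.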

Step 2: Prepare and Index Images Using Python

Next, we will prepare and index images into Elasticsearch using the Oxford Pets dataset.

2.1. Install Dependencies

!pip install torch transformers pillow requests torchvision matplotlib

2.2. Download and Preprocess the Oxford Pets Dataset

import torch
from transformers import CLIPProcessor, CLIPModel
from PIL import Image
import requests
import json
import os
from torchvision import datasets, transforms
from torch.utils.data import DataLoader, Subset

# Load the CLIP model and processor
model = CLIPModel.from_pretrained("openai/clip-vit-base-patch32")
processor = CLIPProcessor.from_pretrained("openai/clip-vit-base-patch32")

# Function to preprocess images and extract features
def extract_features(image):
    inputs = processor(images=image, return_tensors="pt")
    with torch.no_grad():
        image_features = model.get_image_features(**inputs)
        # L2-normalize the vector so that it has unit length
        image_features = image_features / image_features.norm(dim=-1, keepdim=True)
    return image_features.squeeze().tolist()

# Directory to save the dataset
dataset_dir = "./oxford_pets"

# Download and prepare the dataset (using Oxford Pets for demo)
transform = transforms.Compose([transforms.Resize((224, 224)), transforms.ToTensor()])
dataset = datasets.OxfordIIITPet(root=dataset_dir, download=True, transform=transform)

# Ensure there are at least 100 images before taking the subset
assert len(dataset) >= 100, "Dataset should contain at least 100 images."

# Take a subset of the first 100 images
subset_indices = list(range(100))
subset = Subset(dataset, subset_indices)
data_loader = DataLoader(subset, batch_size=1, shuffle=False)

2.3. Index Features in Elasticsearch
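For k-NN search to use the vector field, the field generally has to be mapped as a dense_vector before any documents are indexed. The article does not show this step, so the mapping below is an assumption about what the index could look like; the linked repository may define it differently. The 512 dimensions match the image embedding size of the openai/clip-vit-base-patch32 checkpoint, and the keyword types for name and label are illustrative choices.

```python
import json

def build_index_mapping(dims=512):
    """Build an index body with a dense_vector field set up for cosine k-NN."""
    return {
        "mappings": {
            "properties": {
                "name": {"type": "keyword"},
                "label": {"type": "keyword"},
                "vector": {
                    "type": "dense_vector",
                    "dims": dims,            # must equal the embedding length
                    "index": True,           # make the field searchable with k-NN
                    "similarity": "cosine",  # metric used to rank neighbors
                },
            }
        }
    }

mapping = build_index_mapping()
print(json.dumps(mapping, indent=2))
```

One way to apply it is a single PUT request to the index URL with this body, sent once before the indexing loop, for example `requests.put(f"{ES_HOST}/{ES_INDEX}", json=mapping, auth=(ES_USER, ES_PASS))` using the settings defined in this step.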

# Elasticsearch settings
ES_HOST = "http://localhost:9200"
ES_INDEX = "image-index"
ES_USER = "elastic"
ES_PASS = "changeme"

# Extract features for one image and store them as a document
def index_image(image, label, image_id):
    features = extract_features(image)
    document = {
        "name": f"image_{image_id}",
        "label": label,
        "vector": features
    }
    response = requests.post(
        f"{ES_HOST}/{ES_INDEX}/_doc/{image_id}",
        headers={"Content-Type": "application/json"},
        auth=(ES_USER, ES_PASS),
        data=json.dumps(document)
    )
    return response.json()

# Index the images with labels
for i, (image, label) in enumerate(data_loader):
    # Convert the batched tensor (batch size 1) to a PIL image
    image = transforms.ToPILImage()(image[0])
    # The label arrives as a one-element tensor; turn it into a class name
    label = dataset.classes[label.item()]
    result = index_image(image, label, i)
    image_path = os.path.join(dataset_dir, f"image_{i}.jpg")
    image.save(image_path)  # Save the image for later retrieval
    print(f"Indexed image {i} with label '{label}': {result}")

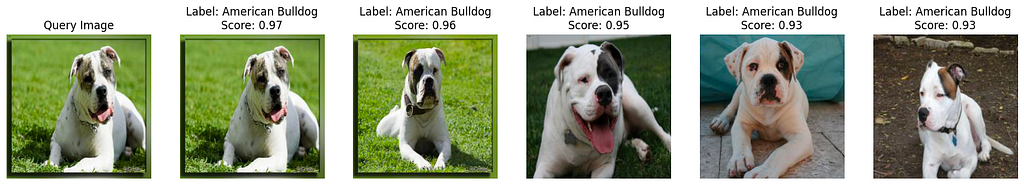
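The loop above sends one HTTP request per image, which is fine for 100 images. For larger collections, Elasticsearch's _bulk endpoint accepts many documents in a single newline-delimited JSON payload. A minimal sketch of building such a payload follows; `build_bulk_payload` is a hypothetical helper, and the labels and two-element vectors are placeholders rather than real CLIP features.

```python
import json

def build_bulk_payload(index_name, documents):
    """Serialize (doc_id, document) pairs into NDJSON for the _bulk API."""
    lines = []
    for doc_id, document in documents:
        # Action line: which index and ID the following line belongs to.
        lines.append(json.dumps({"index": {"_index": index_name, "_id": str(doc_id)}}))
        # Source line: the document itself.
        lines.append(json.dumps(document))
    # The bulk body must end with a newline.
    return "\n".join(lines) + "\n"

# Placeholder documents for illustration only.
docs = [
    (0, {"name": "image_0", "label": "Abyssinian", "vector": [0.1, 0.2]}),
    (1, {"name": "image_1", "label": "Beagle", "vector": [0.3, 0.4]}),
]
payload = build_bulk_payload("image-index", docs)
print(payload)
```

The payload would then be POSTed to the _bulk endpoint of the cluster with the Content-Type header set to application/x-ndjson.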

Step 3: Perform Image-to-Image Search and Display Images

3.1. Search for Similar Images

import matplotlib.pyplot as plt

# Function to search for similar images
def search_similar_images(query_image_path, k=5):
    query_image = Image.open(query_image_path)
    query_features = extract_features(query_image)
    search_query = {
        "knn": {
            "field": "vector",
            "query_vector": query_features,
            "k": k,
            "num_candidates": 100
        }
    }
    response = requests.post(
        f"{ES_HOST}/{ES_INDEX}/_knn_search",
        headers={"Content-Type": "application/json"},
        auth=(ES_USER, ES_PASS),
        data=json.dumps(search_query)
    )
    return response.json()

# Function to display images
def display_images(query_image_path, search_results):
    query_image = Image.open(query_image_path)
    # One axis for the query image plus five for the results (matches k=5)
    fig, axes = plt.subplots(1, 6, figsize=(20, 5))

    # Display the query image
    axes[0].imshow(query_image)
    axes[0].set_title("Query Image")
    axes[0].axis('off')

    # Display the top 5 similar images
    for i, hit in enumerate(search_results['hits']['hits']):
        image_id = hit['_id']
        label = hit['_source']['label']
        similar_image_path = os.path.join(dataset_dir, f"image_{image_id}.jpg")
        similar_image = Image.open(similar_image_path)
        axes[i + 1].imshow(similar_image)
        axes[i + 1].set_title(f"Label: {label}\nScore: {hit['_score']:.2f}")
        axes[i + 1].axis('off')

    plt.show()

# Example search with a query image from the dataset
from random import randint

# randint is inclusive on both ends, so stay within the valid indices 0..len(subset) - 1
query_image, _ = subset[randint(0, len(subset) - 1)]
query_image = transforms.ToPILImage()(query_image)  # Convert tensor to PIL image
query_image_path = "./query_image.jpg"  # Path where the query image is saved
query_image.save(query_image_path)  # Save the PIL image to the specified path
query_result = search_similar_images(query_image_path)

# Display the results
display_images(query_image_path, query_result)
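The dedicated _knn_search endpoint used above has been deprecated since Elasticsearch 8.4 in favor of a knn section in the regular _search API. If your version rejects the request or logs a deprecation warning, a body along these lines may work instead. `build_knn_search_body` and `score_to_cosine` are hypothetical helper names, and the score conversion assumes the vector field is mapped with cosine similarity.

```python
def build_knn_search_body(query_vector, k=5, num_candidates=100):
    """Request body for POST /<index>/_search using the top-level knn option."""
    return {
        "knn": {
            "field": "vector",
            "query_vector": query_vector,
            "k": k,
            "num_candidates": num_candidates,
        },
        "size": k,
        # Leave the stored vectors out of the response; only name and label are needed.
        "_source": ["name", "label"],
    }

def score_to_cosine(score):
    """Undo Elasticsearch's cosine scoring, where _score = (1 + cosine) / 2."""
    return 2.0 * score - 1.0

# A zero vector of the right length stands in for real query features.
body = build_knn_search_body([0.0] * 512)
print(sorted(body.keys()))
```

The response has the same hits structure as before, so the display function does not need to change. Because scores for a cosine field fall between 0 and 1, converting them back with `score_to_cosine` can make the displayed values easier to interpret.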

Note: I have also created a Flask app to show the search results.

All the code is available on GitHub: propardhu/Docker_ELK_Image_Search (https://github.com/propardhu/Docker_ELK_Image_Search)

Conclusion

In this article, we built an advanced image search system using OpenAI’s CLIP model and Elasticsearch. We set up the ELK stack with Docker, downloaded and prepared the Oxford Pets dataset, indexed image features into Elasticsearch, and performed image-to-image search over those features.